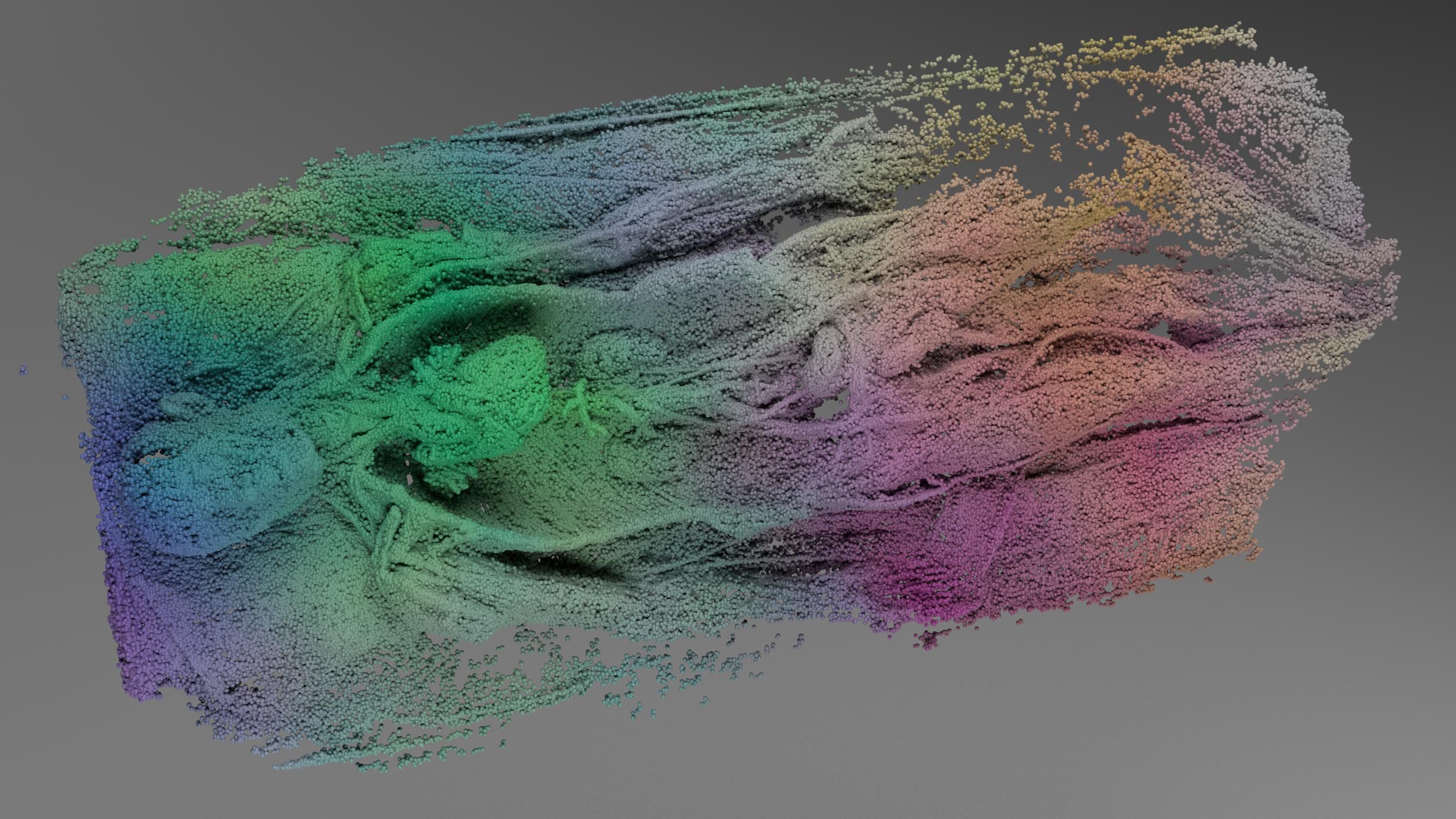

Adam Fung & Nick Bontrager (“drone beuys”)

x-ray specola

Digital animation created from LiDAR scans of anatomical wax models at the La Specola natural history museum. The LiDAR data is processed with generative noise that gently disrupts the scan to produce an undulating effect. Sampled audio was recorded with a sensitive omnidirectional geophone to capture low-frequency vibrations.

Sam Jackson

Wet Opening


Generative artwork using a custom rendering engine built with JavaScript, WebGL, and HTML. Infinite in duration, it is also presented as a 4K video excerpt. Emergent organic forms are suspended in tension with the schematization of the grid, evoking the systemization of life in the Anthropocene.

S4RA

privacy-GrDN.info

Digital animation exploring a hybrid emulation that treats code as the backbone structure of a semiotic container.

Arden Schager

Fields


Multiplayer microsite that reimagines the text input field as a community garden in which participants can type only plant emojis. Fields explores notions of shared digital space, ambient computing, and the limits of symbolic language.

Colin Ives

Mud Maps

This project uses video generated by an AI model trained on footage of volcanic mud pools in Iceland. The process allows map shapes to emerge in the microcosm of the volcanic pool. Base code: Pix2Pix. NVIDIA Corporation: Ting-Chun Wang, Ming-Yu Liu, Jun-Yan Zhu. Modifications: JC. Tech workflow: Holly Newlands.

Michael Betancourt

Five Bodyscapes

These animations use generative glitches produced by AI (LoRA) models that interact to cancel out each other's work. The glitches serve as guide images (keyframes) for the animorph that produces the movie; in the process, the static AImages are transformed so that they no longer appear in the finished movie.

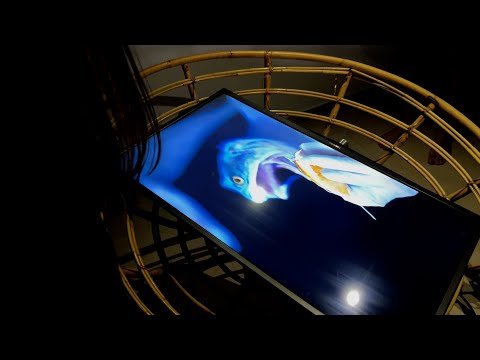

Sara Bonaventura

Iconoplast 360

Interactive 360-degree animation featuring CGI of 3D-scanned props made from recycled plastic by injection molding, exploring deep time, dark ecology, and viscous postnatural matter. In collaboration with ZeroWaste backBO, FabLAb TV, and Donald Dunbar.

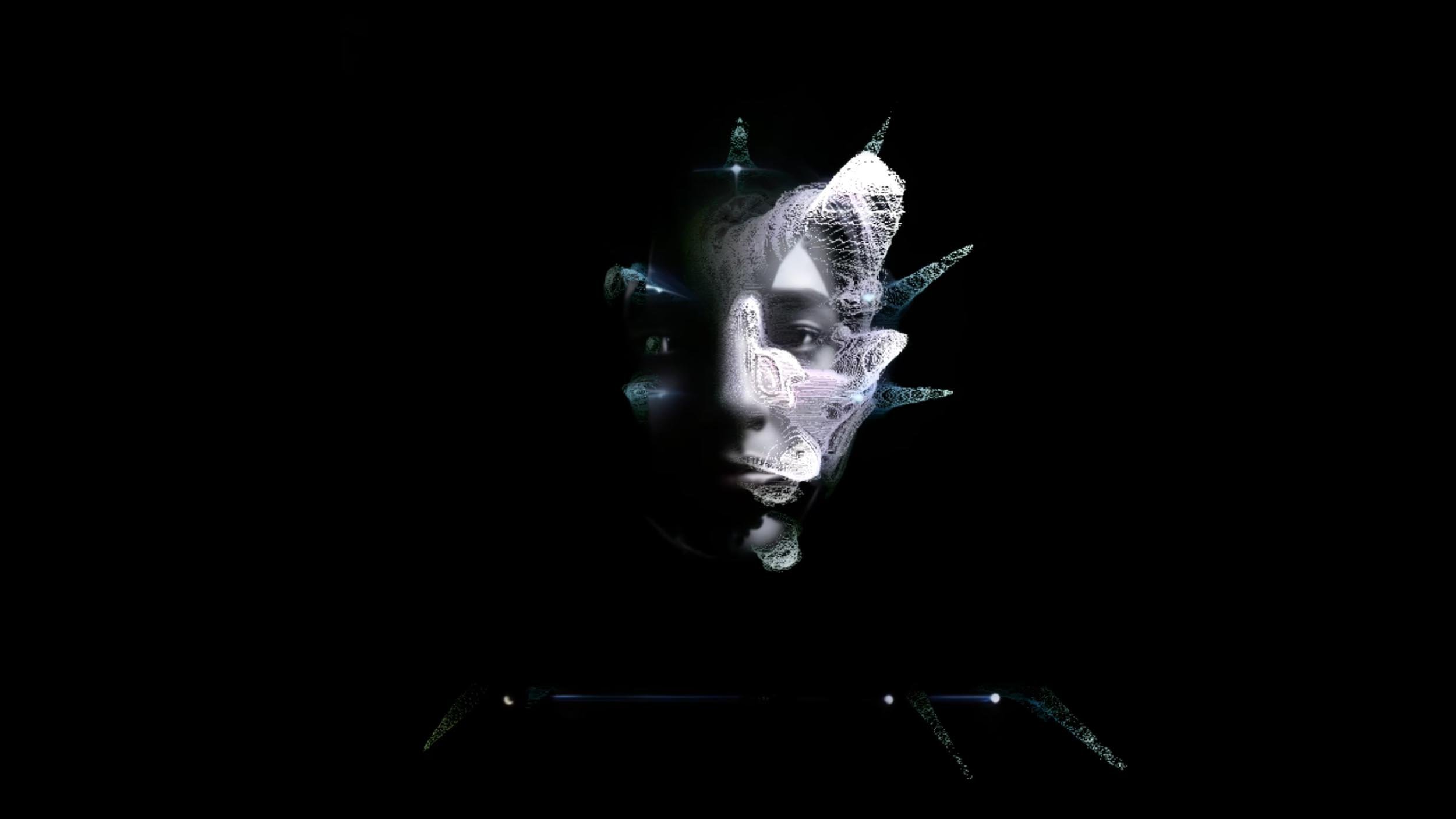

Petra

About the other us

Digital performance created with real-time AI face tracking, an audio-reactive point cloud, and a SuperCollider synthesizer, exploring the intersection of the human and the digital through a cybernetic dance that redefines the authenticity of the body in the synthetic age.

Elina Zazulia

Vegemeral

Audiovisual project created with neural networks (GEN2), blending AI-generated visuals with fleeting botanical movements.

Melanie Olde

Fern

Hand-woven cloth of paper and monofilament whose structure was generated by plant-growth model algorithms, processed and translated through digital applications, and woven on a loom into an emergent, organic three-dimensional form.

Ho Yi Dan

Ebbs and Flows

Digital installation combining human interaction, sensors, and AI-generated visuals. It explores movement as an interface for image creation and reflects on the delicate balance between humans and marine life and our shared vulnerability to climate change.

Gabriele Walter

Murmuration/ It's like a droneshow #01

Video stills of starling swarms, zoomed in and digitally arranged into a new, abstract swarm. Inspired by swarm intelligence in nature, I investigate the risks of autonomous AI systems, their swarm behavior, and the emergent patterns of collective action.

Joshua Albers

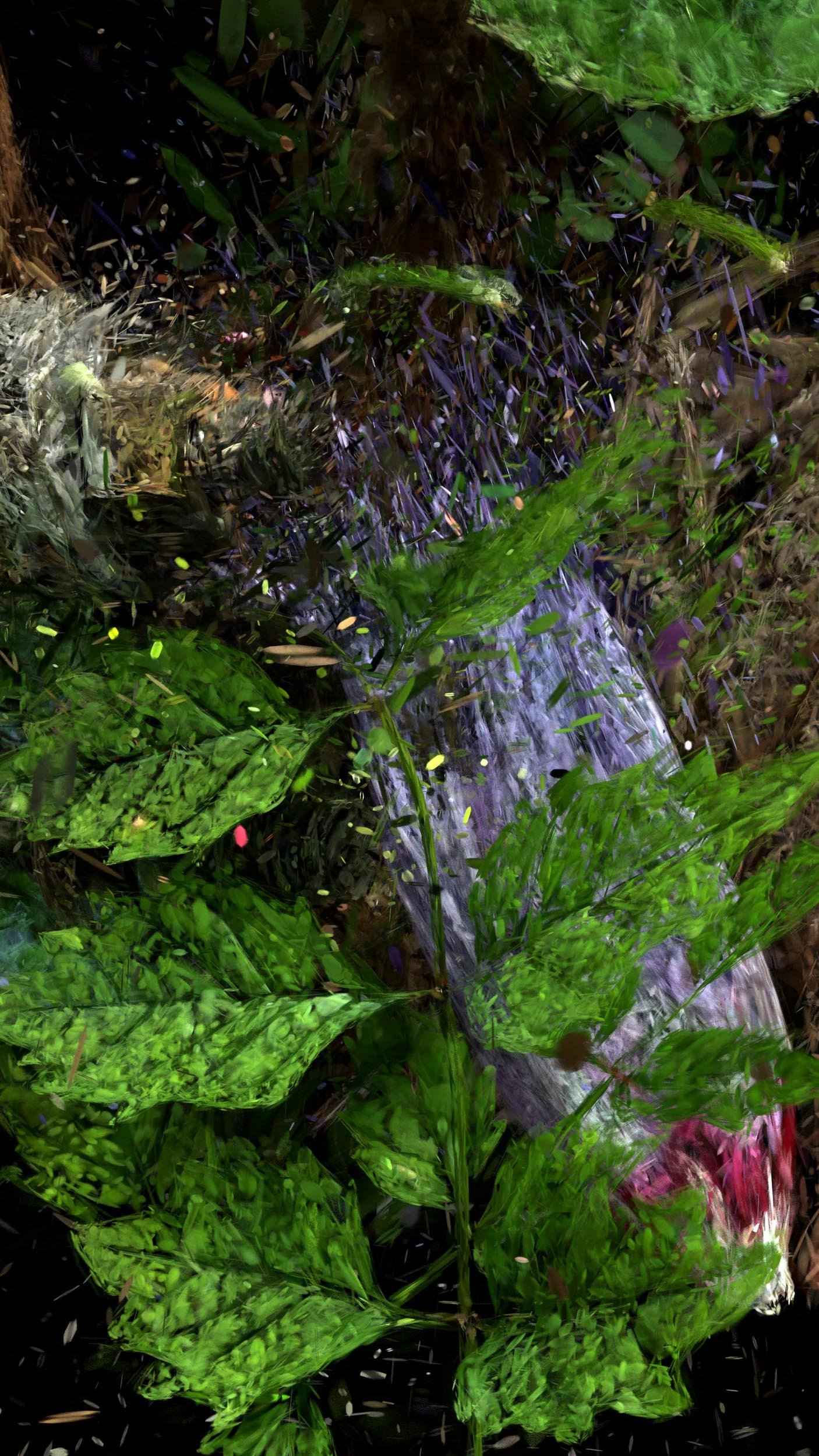

Interference 01

Digital video loop created from manipulated 3D Gaussian splats of local plants and infrastructure, contemplating the ways species shape their surroundings.

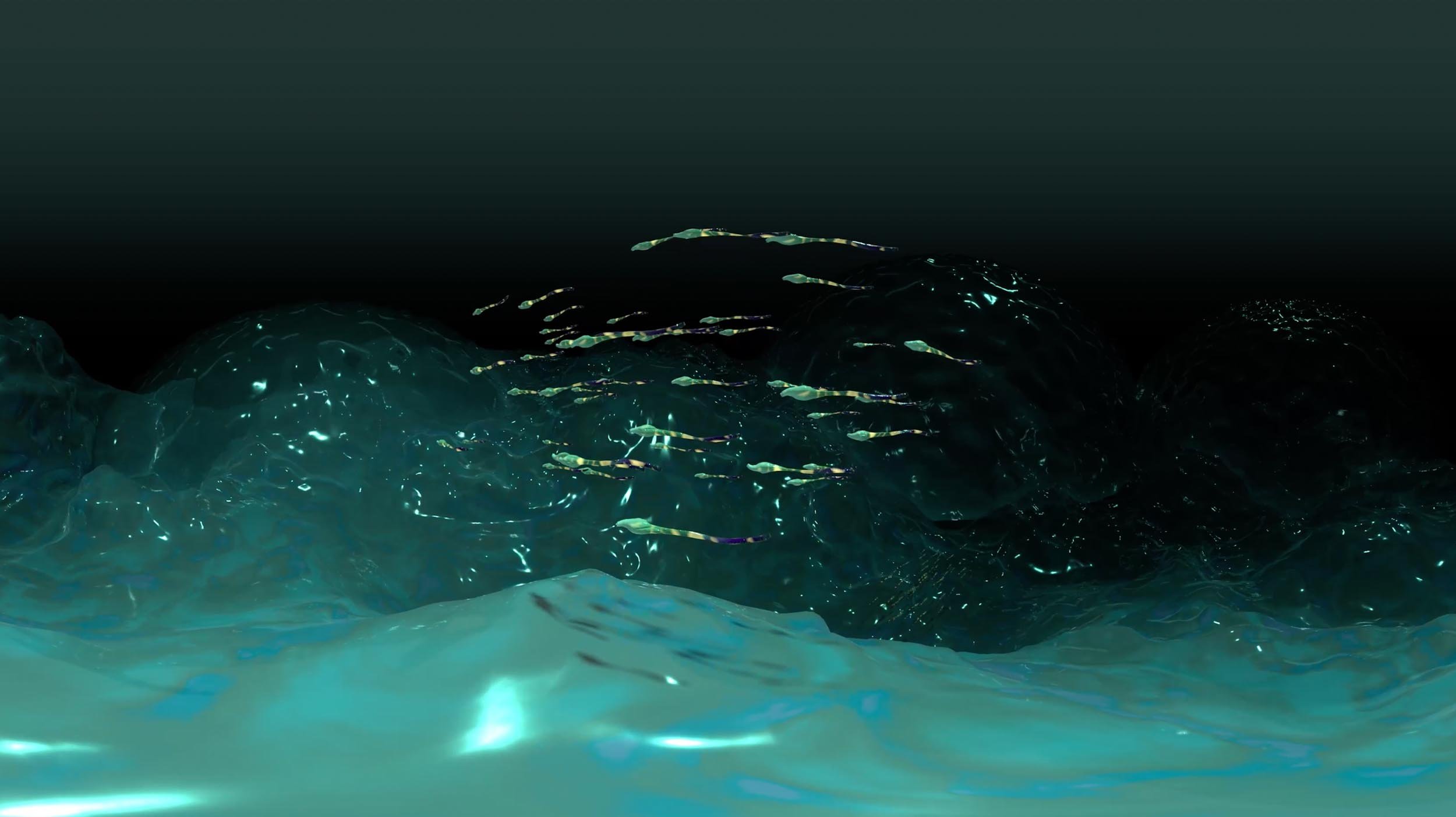

Ong Kian Peng

Sky River

3D animation imagining a future in which climate change has radically transformed our landscape and inflicted collateral damage on the hydrological cycle.

Katina Bitsicas

Cyprinus carpio L.

Video created from layered overhead-projector transparencies of microscopic imagery of glyphosate-contaminated carp, combined with water. Encountering the imagery at larger-than-life scale, the viewer is made to confront the mortality of the fish.

Jan Swinburne

TIME BREATHES

Moving-image work derived from a still image of the audio waveform of my voice speaking the word TIME.

Quin de la Mer

Heaven in a Wildflower

Through the phytogram filmmaking method, a desert wildflower petal provided the internal chemistry to create prints on expired, undeveloped 35mm black-and-white film. This digital image explores a microcosm’s ability to echo the cosmos.

Adrian Pijoan

Epiphyte

Live visualization in Unreal Engine 5 created from the bioelectrical signals of a plant connected to custom electronics in a laser-cut enclosure. The work explores the interior dream life of plants and technology created for nonhuman entities.

Wes Viz

Digitally Rewilding The Westfjords

In 'Digitally Rewilding the Westfjords', I explore creating an immersive 3D environment to reimagine the landscape and decolonize the local vegetation. The environment was built from real-world satellite heightmap data. No AI was used at any point.

Sana Maqsood

Waves of Us: A Biofeedback Installation

Documentation of a sonic experience focused on a unique moment when two human minds come together to create a multisensory experience from brainwave data, one that can only exist in that exact moment in life.